Analogue-to-digital (A-D) and digital-to-analogue (D-A) conversion is an important concept to understand if you work with real instruments and record them on a computer or other digital device. This article is an introduction to the topic and should give you a good practical understanding of it.

Firstly, what is sound?

Many things produce sound: an explosion, a hammer striking something and a sneeze are all examples! There is a physical disturbance, and some of the energy expended in that movement is converted into a sound wave. This sound wave propagates through the air through the movement of air molecules. It finally arrives at our ears, makes our eardrums vibrate and allows us to hear the source. As the old philosophical riddle asks, ‘if a tree falls and there’s nobody around to hear it, does it make a sound?’ Well, yes, it does, just as pretty much everything else you can think of does. If you play a musical instrument or sing, then you are the source of the sound. You create vibrations in the air that propagate out to whoever is listening. It’s this ‘musical’ kind of sound that we are looking to capture in our recordings.

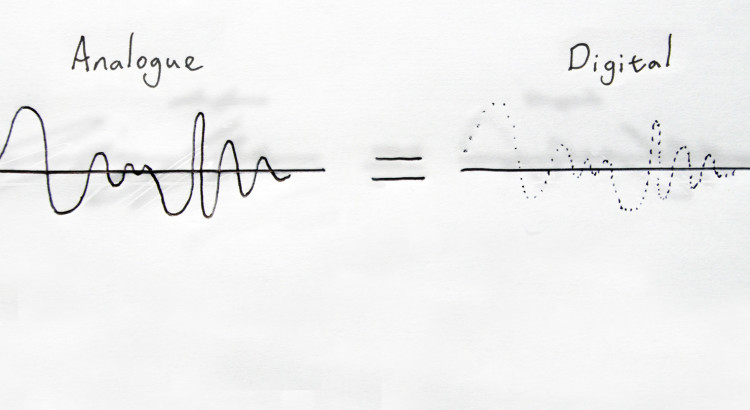

What’s the difference between analogue and digital?

The sound you hear is analogue by definition! It’s a real-world disturbance in the air that travels and has physical properties such as speed and amplitude. Digital, on the other hand, is a collective term for the way we can now store data (sound, in this case) as a series of numbers. We can represent a sound wave very accurately in digital form. To do this, we capture an electrical representation of the displacement of the air and then sample the resulting voltage many thousands of times per second. More on that later.

How is sound captured?

Sound can be captured by a microphone, which contains a diaphragm that vibrates in much the same way as your eardrum does (everything is still analogue at this stage). In a regular dynamic microphone, a coil of wire is attached to the diaphragm and moves within the field of a magnet. This movement induces a current in the wire, a process called ELECTROMAGNETIC INDUCTION. The diaphragm moves backwards and forwards many times per second, so the current also changes direction at the same rate. This is what we call an ‘alternating current’, or AC.
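For readers who want the physics, the voltage induced in a conductor moving through a magnetic field can be written as follows. This assumes the wire moves at right angles to the field, which is roughly the arrangement in a dynamic microphone’s magnet gap. Here $B$ is the magnetic flux density, $\ell$ is the length of wire inside the field and $v$ is the speed of the coil, which follows the diaphragm:

$$\varepsilon = B\,\ell\,v$$

Every time the diaphragm reverses direction, $v$ changes sign, and so does the induced voltage. This is why the microphone’s output is an alternating signal.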

This current is an electrical representation of the sound that was ‘heard’ by the microphone, and it produces a voltage between the microphone’s two output terminals. If the microphone is connected by a cable to a mixing desk, the desk ‘sees’ an alternating voltage, which we call the signal voltage. When people talk about receiving a signal at the mixing desk, what they really mean is this: a series of voltage changes is happening across the ends of the wires in the microphone cable that is plugged in!

What then happens to this signal?

In a regular analogue recording console or mixing desk, the signal is processed and then amplified before going to the speakers. The microphone might instead be plugged into a digital recording device (most likely a computer). In that case, the analogue signal (voltage) is converted to a digital signal by the analogue-to-digital (A-D) converters, and the fun begins!

What are those converters called again? And how do they work?

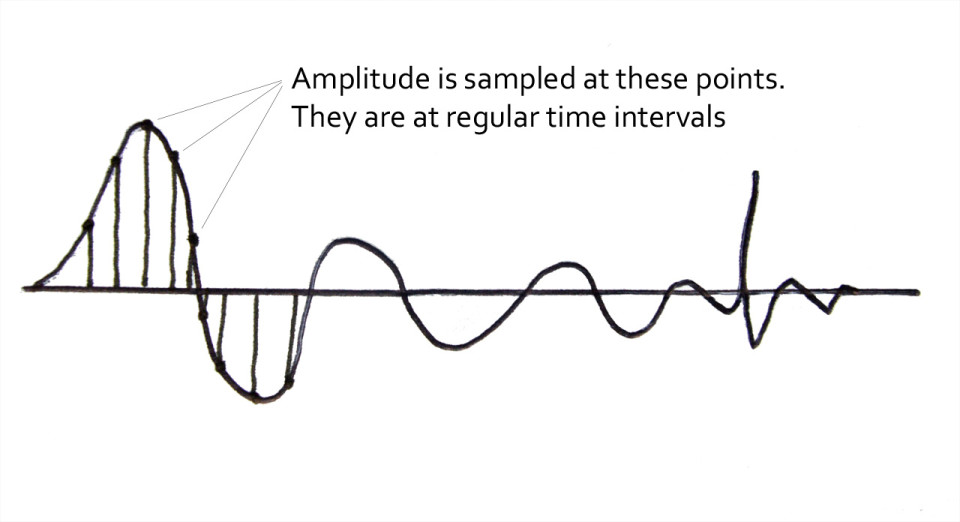

Analogue-to-digital converters (A-D converters) do exactly what their name says. They measure the signal voltage and record its amplitude a pre-determined number of times per second; each measurement is called a sample. The more times per second the amplitude is sampled, the higher the frequencies that can be accurately represented. You can change a setting in your recording software that dictates how many times per second the voltage is sampled. This number of samples per second is called the sampling frequency (or sample rate).

We normally use 44,100 or 48,000 samples per second (44.1 kHz or 48 kHz). In the diagram above, the points on the waveform represent the moments at which the amplitude is sampled.
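To make sampling concrete, here is a minimal Python sketch that ‘samples’ an ideal sine wave in software. The sine wave stands in for the voltage an A-D converter would measure. It assumes a pure tone, with 440 Hz chosen purely for illustration. The function name `sample_sine` is hypothetical and not part of any audio library.

```python
import math

def sample_sine(freq_hz: float, sample_rate_hz: int, duration_s: float,
                amplitude: float = 1.0) -> list[float]:
    """Measure the amplitude of an ideal sine wave at evenly spaced instants."""
    num_samples = int(round(sample_rate_hz * duration_s))
    # The n-th sample is taken at time t = n / sample_rate_hz seconds
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(num_samples)]

# 10 milliseconds of a 440 Hz tone sampled at 44.1 kHz
samples = sample_sine(440.0, 44_100, 0.01)
print(len(samples))
```

At 44.1 kHz, 10 milliseconds of audio gives 441 amplitude values; at 48 kHz the same duration gives 480.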

In what format is the digital data stored?

You tell the computer how many times per second you would like the waveform’s amplitude to be measured (the sampling frequency). At each interval, the amplitude of the signal voltage is stored as a number by your audio software. For example, at a sampling frequency of 44,100 times per second (44.1 kilohertz), 44,100 discrete numbers are stored for each second of mono audio that you record. That’s a lot of numbers!
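This arithmetic is simple enough to check in a few lines. The sketch below uses a hypothetical helper name and counts the amplitude values stored for a recording. It assumes one value per channel at every sampling instant, so a stereo recording doubles the count.

```python
def samples_stored(sample_rate_hz: int, seconds: float, channels: int = 1) -> int:
    """Count the amplitude values stored: one per channel at every sampling instant."""
    return round(sample_rate_hz * seconds) * channels

# One second of mono audio at 44.1 kHz
print(samples_stored(44_100, 1))
# One minute of stereo audio at 44.1 kHz
print(samples_stored(44_100, 60, channels=2))
```

One minute of stereo at 44.1 kHz already comes to over five million numbers.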

These amplitude values must lie between the lowest and the highest numbers that can be stored. The number of available values in that range is determined by the bit depth. Each extra bit doubles the number of available values: 16-bit audio has 65,536 possible values and 24-bit audio has 16,777,216. The higher the bit depth, the more values are available and the more accurately each amplitude can be represented. If we reduce either the bit depth or the sampling frequency, we start to introduce digital artifacts. These become easy to hear once the values are reduced a lot.
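Here is a minimal sketch of what bit depth does to each measured amplitude. It assumes amplitudes normalised to the range -1.0 to 1.0 and the signed-integer convention commonly used for 16-bit audio. Scaling by the largest positive level is only one of several conventions in use, and the helper names are hypothetical. Each value is rounded to the nearest available level, which introduces a small error, and that error grows as the bit depth shrinks.

```python
def quantize(value: float, bit_depth: int) -> int:
    """Round a normalised amplitude (-1.0 to 1.0) to the nearest signed integer level."""
    max_level = 2 ** (bit_depth - 1) - 1    # e.g. 32767 for 16-bit
    min_level = -(2 ** (bit_depth - 1))     # e.g. -32768 for 16-bit
    level = round(value * max_level)
    return max(min_level, min(max_level, level))  # clip anything out of range

def dequantize(level: int, bit_depth: int) -> float:
    """Convert a stored integer level back to a normalised amplitude."""
    return level / (2 ** (bit_depth - 1) - 1)

# Store the same amplitude at two bit depths and compare the rounding error
for bits in (16, 4):
    stored = quantize(0.3, bits)
    error = abs(dequantize(stored, bits) - 0.3)
    print(f"{bits}-bit: {2 ** bits} levels, stored {stored}, error {error:.6f}")
```

With only 16 levels available at 4 bits, the rounding error is far larger than at 16 bits. Errors of that size are what we hear as distortion and noise when the bit depth is reduced a lot.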

What’s that sampling frequency thing again?!

MATHS ALERT: Danger, Danger!

This is where we should try to understand the Nyquist sampling theorem. In simple terms, it states that your sampling frequency must be more than twice the highest frequency you want to capture. Half the sampling frequency is known as the Nyquist frequency. Human hearing is often quoted as ranging from 20 Hz up to 20,000 Hz (20 kHz). Hence the lowest sampling frequency we might use would be double the uppermost frequency (20,000 × 2 = 40,000 Hz). In practice, the sampling frequency often used is 44,100 Hz, or 44.1 kHz. This gives a Nyquist frequency of 22,050 Hz, leaving a little room above the limit of hearing. The higher the sampling frequency, the higher the frequencies we are able to capture. That deserves an article of its own. For now, it’s enough to know that 44.1 kHz samples often enough to accurately represent the full range of human hearing.
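The ‘twice the highest frequency’ rule can be written as a short sketch, together with its flip side, the Nyquist frequency (half the sampling rate). `alias_frequency` is an extra illustration of what goes wrong with frequencies above the Nyquist frequency. It assumes a pure tone and no anti-aliasing filter; real A-D converters filter out such frequencies before sampling. All three function names are hypothetical.

```python
def nyquist_frequency(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent: half the rate."""
    return sample_rate_hz / 2

def minimum_sample_rate(highest_freq_hz: float) -> float:
    """Twice the highest frequency we want to capture (strictly, we need more than this)."""
    return 2 * highest_freq_hz

def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which a pure tone appears after sampling (no anti-aliasing filter)."""
    folded = freq_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)

print(minimum_sample_rate(20_000))
print(nyquist_frequency(44_100))
print(alias_frequency(30_000, 44_100))
```

Without filtering, a 30 kHz tone sampled at 44.1 kHz would be recorded as a spurious 14.1 kHz tone. This is why converters remove everything above the Nyquist frequency before sampling.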

So, after the sound is recorded, what can we do with it?

If you hit play on your computer after recording something, the digital data is converted back to an analogue signal and sent to your speakers so you can hear it. This is digital-to-analogue conversion, and it is handled in real time by the D-A converters. Any processing you apply digitally becomes part of the data that is converted back to analogue.

Summary

We capture analogue sound and can store it ‘as heard’ as digital data on a computer. We can process this data in any number of ways and audition our changes in real time, because our converters constantly turn the digital data back into analogue sound.

So the next time you hit play in your favourite DAW, remember that you are not actually hearing what you see on the screen. You are hearing the result of the D-A conversion. The continuous analogue waveform produced by your speakers is a reconstruction of your digital recording. The spaces between the digital points have been ‘interpolated’ by the converter. Think of this as the gaps in the digital representation being ‘filled in’ so that we hear a continuous analogue waveform again. Pretty clever, right?!
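The ‘filling in’ can be illustrated with the ideal reconstruction formula from sampling theory, known as Whittaker-Shannon or sinc interpolation. In this formula, every sample contributes a sinc-shaped pulse and the pulses are summed. Real D-A converters approximate this with filters rather than computing it directly. Treat this as a sketch of the principle, not of how any particular converter works. It assumes a pure 1 kHz tone sampled at 44.1 kHz, and the function names are hypothetical.

```python
import math

def sinc(x: float) -> float:
    """Normalised sinc function, sin(pi*x) / (pi*x), with sinc(0) = 1."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)

def reconstruct(samples: list[float], sample_rate_hz: float, t: float) -> float:
    """Estimate the continuous waveform at time t (seconds) by summing one sinc pulse per sample."""
    return sum(s * sinc(t * sample_rate_hz - n) for n, s in enumerate(samples))

rate = 44_100
tone = 1_000
samples = [math.sin(2 * math.pi * tone * n / rate) for n in range(2_001)]

# A moment halfway between samples 1000 and 1001, where no sample was taken
t = 1000.5 / rate
print(reconstruct(samples, rate, t), math.sin(2 * math.pi * tone * t))
```

The two printed values agree closely. The small difference comes from using a finite number of samples, since the ideal formula assumes the samples go on forever.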